VJacket

Wearable controller, performance, VJing, image manipulation.

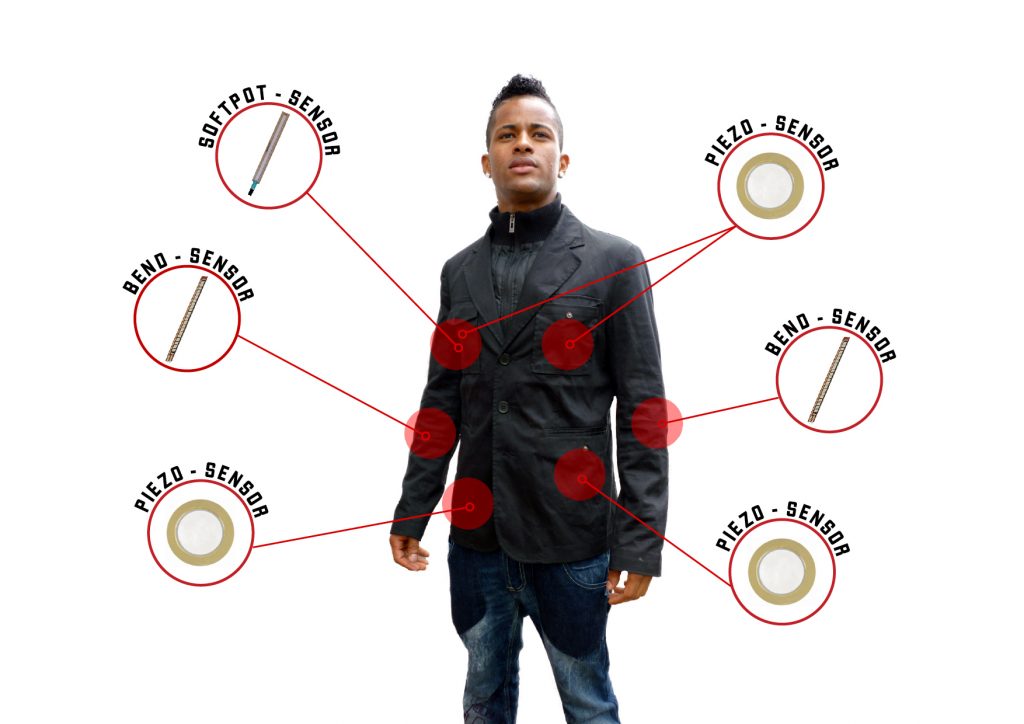

The VJacket is a wearable controller for live video performance. Built into the jacket are bend, touch and hit sensors that send Open Sound Control (OSC) or MIDI messages wirelessly to the VJ program of your choice, letting you control video effects and transitions, trigger clips and scratch frames, all from the comfort of your own jacket.

The Arduino2OSC bridge interface we developed for Arduino projects can be customized to send any type of OSC message to any OSC-capable program, including other video or audio programs such as Resolume Avenue, ArKaos GrandVJ, Max/MSP/Jitter, Reaktor, Ableton Live, Propellerhead Reason, SuperCollider, Kyma, Processing and openFrameworks. You can therefore use the VJacket to control not only video but also sound and other networked interactive media. All the code and hardware designs are open source, so others can make their own wearable controllers and contribute to the growing wave of performative fashion.
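To give a rough idea of what a sensor-to-OSC bridge does, here is a minimal Python sketch that turns a raw sensor reading into an OSC message and sends it over UDP. It is not the Arduino2OSC code itself. The address `/vjacket/bend`, the host and port, the 10-bit (0 to 1023) reading range and every function name are assumptions made for illustration; only the message layout (null-padded address, type tag string, big-endian float) follows the OSC 1.0 format.

```python
import socket
import struct


def osc_pad(data: bytes) -> bytes:
    """Null-terminate and pad to a multiple of 4 bytes (OSC string rule)."""
    padded_len = (len(data) // 4 + 1) * 4
    return data.ljust(padded_len, b"\x00")


def osc_message(address: str, value: float) -> bytes:
    """Build an OSC message carrying a single 32-bit float argument."""
    return (
        osc_pad(address.encode("ascii"))  # address pattern, e.g. "/vjacket/bend"
        + osc_pad(b",f")                  # type tag string: one float
        + struct.pack(">f", value)        # big-endian float32 payload
    )


def scale_reading(raw: int, raw_max: int = 1023) -> float:
    """Map a raw reading (assumed 10-bit analog value) to 0.0..1.0, clamped."""
    return max(0.0, min(1.0, raw / raw_max))


def send_reading(raw: int,
                 address: str = "/vjacket/bend",  # hypothetical address
                 host: str = "127.0.0.1",         # assumed: VJ program on this machine
                 port: int = 7000) -> bytes:      # assumed: program's OSC input port
    """Send one scaled sensor reading as an OSC message over UDP."""
    packet = osc_message(address, scale_reading(raw))
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.sendto(packet, (host, port))
    return packet
```

Calling `send_reading(512)` would send a value near 0.5 to whatever program is listening on the assumed port. Changing the address string is what lets the same reading drive a different effect, clip or audio parameter on the receiving side.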

The VJacket was developed at The Big South Lab in Rotterdam, NL by Tyler Freeman and Andreas Zingerle.

Publication:

Freeman, T., Zingerle, A., “Enabling the VJ as Performer with Rhythmic Wearable Interfaces”, ISEA 2011, Istanbul, Turkey.